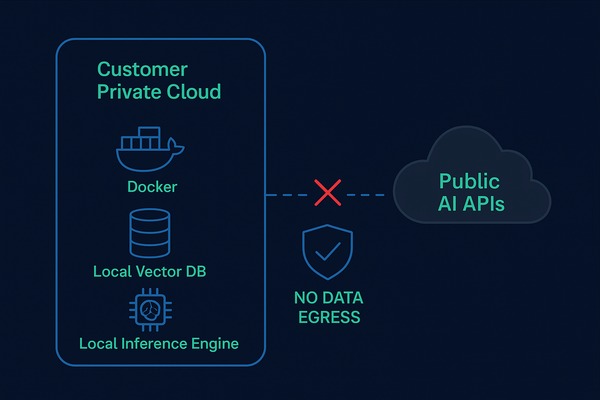

Secure, Private, and Sovereign. Deploy Zedly on Your Infrastructure.

The only self-hosted AI chat for businesses that runs entirely within your VPC or air-gapped environment. Zero data egress guaranteed.

Three Ways to Deploy Llama 3 Locally for Enterprise

Choose the deployment model that matches your security posture and compliance requirements.

Private Cloud (VPC)

Single-tenant deployment into your AWS or Azure environment via AWS PrivateLink or Azure Private Link. Your data stays within your cloud perimeter, with full network isolation and encryption at rest.

On-Premise (Docker)

We provide Docker images and Helm charts, and you run them on your own bare metal or existing Kubernetes cluster. You keep full control over compute, storage, and network configuration.

Air-Gapped (Offline)

Fully offline delivery via physical media or secure download. Ideal for SCIFs, defense contractors, and other highly regulated environments that require air-gapped AI document search.

Local Vector Database for AI Document Chat

Our local AI solution for confidential documents uses a completely self-contained stack. No external API calls, no telemetry, no data leaving your network.

The air-gapped search system runs entirely locally, using Voyage AI embedding models for semantic search and your choice of Llama 3 or Mistral for inference. All components are containerized and can run on a single server or across a distributed cluster.

- Local Inference: Llama 3 (8B/70B) or Mistral models run entirely on your GPUs

- Vector Storage: Self-hosted Qdrant or Milvus, so private contract analysis runs fully offline

- Embeddings: Local Voyage or E5 models for semantic document indexing

- Document Processing: Apache Tika + Tesseract OCR for PDF/DOCX/images
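The stack above follows the usual retrieval pattern: embed each document, store the vectors, retrieve the passages closest to a question, and hand them to the local model as context. The sketch below illustrates that flow in plain Python under clearly stated assumptions. `toy_embed` is a hypothetical bag-of-words stand-in for a real Voyage or E5 embedding model, `InMemoryIndex` is a hypothetical brute-force substitute for a Qdrant or Milvus collection, and `build_prompt` only assembles text without calling Llama 3 or Mistral. None of these names are Zedly APIs.

```python
import math
import re
from collections import Counter


def toy_embed(text: str) -> dict[str, float]:
    """Hypothetical stand-in for a local embedding model such as Voyage or E5.

    Returns an L2-normalized bag-of-words vector keyed by token. Real embedding
    models capture meaning; this toy version only captures shared words.
    """
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values()))
    return {tok: c / norm for tok, c in counts.items()} if norm else {}


class InMemoryIndex:
    """Hypothetical brute-force index standing in for a Qdrant or Milvus collection."""

    def __init__(self) -> None:
        self._items: list[tuple[str, dict[str, float]]] = []

    def add(self, text: str) -> None:
        self._items.append((text, toy_embed(text)))

    def search(self, query: str, k: int = 3) -> list[tuple[float, str]]:
        q = toy_embed(query)
        # Both vectors are unit length, so their dot product is the cosine similarity.
        scored = [
            (sum(w * vec.get(tok, 0.0) for tok, w in q.items()), text)
            for text, vec in self._items
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Assemble retrieved passages into a prompt; sending it to Llama 3 or Mistral is out of scope."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    index = InMemoryIndex()
    for doc in [
        "The supplier contract renews automatically every twelve months.",
        "Pump 4 maintenance log: bearing replaced after vibration alarm.",
        "Patient intake notes are summarized weekly by the clinic.",
    ]:
        index.add(doc)
    question = "When does the supplier contract renew?"
    top = index.search(question, k=1)
    print(build_prompt(question, [text for _, text in top]))
```

With these sample documents, the contract clause scores highest because it shares "the", "supplier", and "contract" with the question. A real embedding model would also match "renew" to "renews" by meaning, which this word-overlap toy cannot do.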

Hardware Requirements

Minimum specifications for running self-hosted AI in production, including HIPAA-regulated workloads.

| Scale | Model | Minimum GPU | RAM | Storage |
|---|---|---|---|---|
| Standard | Llama 3 8B | NVIDIA A10G (24 GB) | 64 GB | 1 TB NVMe |
| Analyst | Llama 3 70B | NVIDIA A100 (80 GB) or 2× NVIDIA RTX A6000 (48 GB each) | 128 GB | 2 TB NVMe |

Compatible with Ubuntu 22.04 LTS and Red Hat Enterprise Linux 8/9.
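A quick way to sanity-check the GPU column is to estimate weight memory as parameter count × bytes per parameter. The sketch below assumes nominal sizes of 8 and 70 billion parameters and counts weights only, ignoring the KV cache, activations, and runtime overhead. By this arithmetic, 16-bit Llama 3 70B weights need roughly 140 GB, more than either Analyst-tier option provides, so that tier presumably relies on 8-bit or 4-bit quantized weights. The page does not say which. `weight_memory_gb` and `fits_weights` are hypothetical helpers, not part of any Zedly tooling.

```python
# Approximate storage cost of one parameter at common precisions (bytes).
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, precision: str) -> float:
    """Approximate GPU memory in GB (1 GB = 1e9 bytes) for model weights only.

    Ignores KV cache, activations, and runtime overhead, which all need extra room.
    """
    return params_billion * BYTES_PER_PARAM[precision]


def fits_weights(params_billion: float, precision: str, gpu_gb: float) -> bool:
    """True if the weights alone fit in the given total GPU memory (hypothetical helper)."""
    return weight_memory_gb(params_billion, precision) <= gpu_gb


if __name__ == "__main__":
    # Tiers from the table; 2x RTX A6000 is treated as 96 GB, assuming the model
    # is split across both cards.
    tiers = {
        "Standard / A10G": (8, 24),
        "Analyst / A100": (70, 80),
        "Analyst / 2x A6000": (70, 96),
    }
    for name, (params, gpu_gb) in tiers.items():
        for precision in BYTES_PER_PARAM:
            need = weight_memory_gb(params, precision)
            verdict = "fits" if fits_weights(params, precision, gpu_gb) else "does not fit"
            print(f"{name}: {precision} weights ~{need:.0f} GB of {gpu_gb} GB, {verdict}")
```

Run as a script, it reports that 16-bit 8B weights (about 16 GB) fit on the A10G, while 16-bit 70B weights fit on neither Analyst option. Because real deployments also need headroom for the KV cache, "fits" here is a lower bound on what is required, not a guarantee.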

Industry Use Cases

Trusted by organizations that cannot compromise on data sovereignty.

Legal Discovery

Secure e-discovery and contract analysis without cloud exposure. Index privileged documents and run searches that never leave your firm's network.

Healthcare & Life Sciences

Supports HIPAA-compliant deployments with no data retained outside your environment. Summarize clinical notes, analyze patient records, and support research workflows, all on-premise.

Manufacturing & Defense

Analyze factory logs, maintenance records, and compliance documentation locally. ITAR-compatible deployments for defense contractors.

Ready to Go Offline?

Get a custom deployment plan tailored to your infrastructure and compliance requirements.

Contact Sales for Licensing